
62 comments

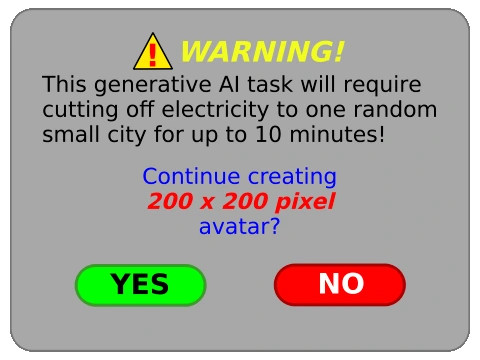

@catsalad @ReneDamkot That's the reason why I sped up my efforts to move away from Android on my phone. (I already moved from Microsoft to Linux years ago.) Part of it is the AI story.

@LInearness I bought a Fairphone, on which you can easily install /e/OS, or you can buy it with /e/OS preinstalled.

@catsalad I think this is blown out of proportion, especially considering that genAI models for image generation aren't that big and can run on consumer-grade hardware.

@starsider Yes, but my point is that this prompt is kind of blowing unrelated things out of proportion. An image at this sort of size wouldn't even take a second on a 4090, meaning the amount of energy consumed is going to be pretty low.

@tofu Yes, but we should keep all costs in mind. If there's high enough demand for local models, there will also be demand for online services and for training new models. Making AI art should be frowned upon, and this is just one of the reasons.

@tofu I would agree with @starsider. You can run models like Stable Diffusion on your own hardware. Get one of those little power plugs that counts electricity usage. Connect everything. You'll see that generating your image won't take anywhere near the power consumption of a small city. That misinformation is not helpful. AI has its problems, but you shouldn't go around telling people that generating one image will boil the oceans.

@mschfr @tofu @starsider None of this would even matter if we could just get our collective shit together as a society and make a big push for green(er) energy infrastructure. Who cares how much energy training and inference takes if it's coming from solar, wind, or nuclear power? We fight over the wrong things, imo.

@wagesj45 Yeah, but it's not easy to pull off, especially with people and governments steering away from actual green options like nuclear power.

@tofu @wagesj45 I wouldn't agree with your nuclear power take, but even so: the majority of the AI data centers are going up somewhere in the USA, and the USA is far from steering away from nuclear power. And even we here in Germany are at the lowest CO2/kWh since sometime in the 19th century, even after switching off all nuclear.

@starsider @tofu Yeah, but even 20x the energy consumption would give it the CO2 impact of 1.5 months of one single cruise ship. And only a few big players worldwide can even think about training such a model.

@catsalad

@blaue_Fledermaus @catsalad Exactly. Once a GenAI model exists, it doesn't need more power to generate a 1024x1024 image than playing a high-end computer game at the highest detail settings on a big PC with a decent GPU for about a minute.

@flying_saucers @catsalad That's suggesting Llama 3.1 8B and 405B have comparable outputs, or the like. We can run small models at home relatively quickly, sure, but that's not what the likes of OpenAI or Meta have running behind their flagship APIs. If you're curious, you can compare the recommended hardware for the different variants of the Llama models here: https://llamaimodel.com/requirements/ (I use this as an example because the comparison is so clear.)

@catsalad As long as that Children's Hospital has diesel generator backup, what's the downside?

@catsalad eheheh, generative AI and LLMs, as they are implemented today, are probably the most inefficient brains ever made! The only positive thing about this is that they are human-built brains. :)

@catsalad Paris Marx recently made a 4-episode Tech Won't Save Us miniseries about the costs of AI and big data. Here's the 1st episode: https://techwontsave.us/episode/241_data_vampires_going_hyperscale_episode_1

@catsalad If it was like The Box, and reminded you that someone else pushing the button could be affecting your city

@catsalad

@catsalad

So what, I took down the entire Icelandic power grid generating a wallpaper. 4k Moopsy wallpapers don't generate themselves, ya know.