

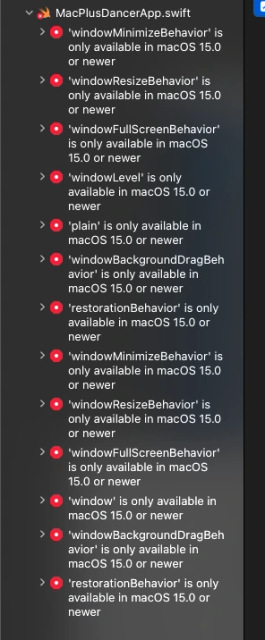

@samhenrigold omg. Amazing. Now I'm wondering if I can port this to a Konami-code JavaScript file for websites.

"it has no business requiring the very latest OS, let me lower the deployment target real quick", I thought

@grishka the window stuff in 15 is soooo convenient. i didn't even have to dip into NSApp this time (aside from the video player representable)

Some implementation details: In the original product from 2003, each dancer had two videos: the dancer on a black background and a white-on-black matte. This saved a ton of space on the CD-ROM, since transparent videos were (and still are) enormous. The app would just composite the two together on the user's machine to make a transparent video. I'm doing the same thing in my implementation.

I use an AVMutableComposition to sync up the two video tracks and handle the timeline-y stuff...

...and for the actual frame-by-frame compositing, I'm leaning on AVVideoCompositing. The custom compositor uses CIBlendWithMask to blend the main video with the matte. I've never dug super deep into AVFoundation, so I was kinda surprised by how easy this was (~70 lines). Most of the work was just setting up the scaffolding around it. I didn't need to dive into shader land or anything of the sort. https://github.com/samhenrigold/MacPlusDancer/blob/main/MacPlusDancer/CustomVideoCompositor.swift
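For anyone curious what the matte step boils down to per pixel: Core Image's filter reference describes CIBlendWithMask as interpolating between an input image and a background using a grayscale mask, where a mask value of 1 gives the image and 0 gives the background. Below is a minimal plain-Swift sketch of that math, assuming frames have already been decoded into arrays of RGBA values in 0...1 and the matte into one gray value per pixel. `Pixel`, `blendWithMask`, and `applyMatte` are hypothetical names for illustration only; they are not from the MacPlusDancer repo, which does this on the GPU via Core Image inside a custom AVVideoCompositing compositor.

```swift
/// Hypothetical RGBA pixel, channels in 0...1 (illustration only).
struct Pixel: Equatable {
    var r: Double, g: Double, b: Double, a: Double
}

/// Linear interpolation between `foreground` and `background` driven by `mask`:
/// mask 1 -> foreground, mask 0 -> background (the behavior CIBlendWithMask is
/// documented to have; this is a CPU sketch, not Core Image's implementation).
func blendWithMask(foreground: Pixel, background: Pixel, mask: Double) -> Pixel {
    let m = min(max(mask, 0), 1)  // clamp the mask value to 0...1
    func mix(_ f: Double, _ b: Double) -> Double { f * m + b * (1 - m) }
    return Pixel(r: mix(foreground.r, background.r),
                 g: mix(foreground.g, background.g),
                 b: mix(foreground.b, background.b),
                 a: mix(foreground.a, background.a))
}

/// Assumed setup: `dancer` is an opaque dancer-on-black frame and `matte` holds
/// the white-on-black matte's gray value for each pixel. Blending against a
/// fully transparent background turns the matte into the output alpha.
func applyMatte(dancer: [Pixel], matte: [Double]) -> [Pixel] {
    precondition(dancer.count == matte.count, "frames must be the same size")
    let clear = Pixel(r: 0, g: 0, b: 0, a: 0)
    return zip(dancer, matte).map { pixel, maskValue in
        blendWithMask(foreground: pixel, background: clear, mask: maskValue)
    }
}
```

Because the dancer frame is opaque and the background is clear, a matte value of 0 yields a transparent pixel, 1 keeps the dancer pixel unchanged, and gray values along the matte's edges give partial transparency.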

@samhenrigold this is incredible